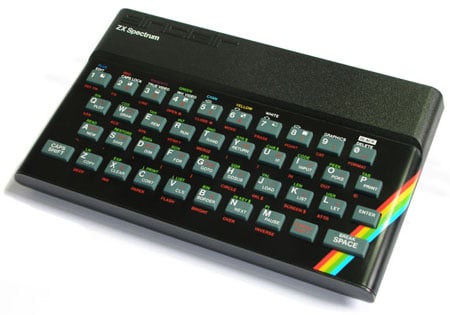

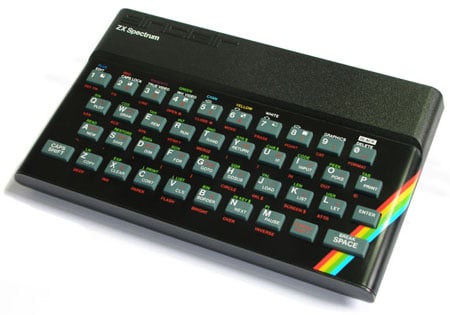

I'm old enough to remember what the world was like before there was the mouse. Or the trackball. Or the stylus. Or the touch screen. Or 3D interactive cameras that watch you (even on stand-by).

Back in the day, all we had was a keyboard. Nothing else has allowed humans to interact with computers more effectively.

Mouse free since '83

My journey to keyboard shortcut nirvana began when I was a shy 19-year-old. At the time, I worked for a telecommunications company in England, building software for them with the new and as yet non-standard Java 1.1. Being shy, when my mouse happened to break I didn't speak up and ask the intimidating manager for a replacement. Instead, I set about figuring out how to use keyboard shortcuts to do everything I would otherwise have done with a mouse.

I figured, "I'll be slower, but at least I'll be cheap".

I came to realize over the next week that I wasn't slower without a mouse. I was faster. And not just a bit faster, much faster. Strangely, with that speed came a sure-footedness that I hadn't experienced before.

It turned out that everything I needed to do on a computer (as a programmer) could be done with a combination of key presses. For example, maximize a window: alt-space-x; restore a window: alt-space-r; switch program: alt-tab; switch to the previous program: alt-shift-tab.

Mmmmmm Conventions

There were global shortcuts that worked on all of the windows and screens (copy: ctrl-c, paste: ctrl-v, go to the next anything: tab). I came to learn that there were also conventions for keyboard shortcuts that most programs followed: alt-f-o (or ctrl-o) to open a file, alt-f-a to save as.

And finally, there were keyboard shortcuts for each specific piece of software that I used to do my job. In Firefox, for example, open a tab: ctrl-t; reopen a previously closed tab: ctrl-shift-t.

Initially, when faced with a task that I had previously done with the mouse, it took me a little while longer to figure out how to get to the right menu, select the right sub-menu and then activate the dialog to do a specific task. Once I had performed the keyboard shortcut a couple of times, it became seared into my muscle memory. From that point on, all I had to do was think of what I wanted and the keyboard shortcut was performed by my hands, without me needing to think of the individual steps. That is, I don't think "alt-space-x". I think "maximize", and the window is magically maximized.

The keyboard gives you a way to utilize muscle memory to control the computer. This is very important. Humans are great at learning new muscle skills. Walking, talking, singing, jumping, painting, tying your shoelace.

Turns out the keyboard is the only input device that will let you use muscle memory.

There are two other interesting things about a keyboard. One is that its operations are discrete: individually separate and distinct. That is to say, you have either pressed a key (or combination of keys) or you haven't. There is no concept of half-pressing a key, or pressing a key hard, or the letter 'a' moving position on you.

Actually, the 'a' can move on this keyboard.

The next thing is that the operating system, which is responsible for listening to the keyboard, queues up your actions and processes them, in order, as fast as it can. I know I can type a word or perform 4 keyboard shortcuts in a row without having to know what is happening on the screen. I'm always confident that the computer will execute them in the order that I thought of them, and that the next keystroke won't take effect until the previous one completes.
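If you want to see that idea in miniature, here is a toy sketch in Python. The `KeyQueue` class and its method names are made up for illustration; a real operating system's input queue is far more involved, but the first-in, first-out behaviour is the part that matters here.

```python
from collections import deque


class KeyQueue:
    """Toy model of an input queue: keystrokes wait their turn, in order."""

    def __init__(self):
        self._pending = deque()  # keystrokes typed but not yet handled
        self.handled = []        # keystrokes the "program" has processed

    def press(self, chord):
        # Typing never waits for the screen: the chord just joins the queue.
        self._pending.append(chord)

    def drain(self):
        # The program catches up later, strictly first-in, first-out.
        while self._pending:
            self.handled.append(self._pending.popleft())
        return self.handled


queue = KeyQueue()
for chord in ["alt-space-x", "ctrl-c", "alt-tab", "ctrl-v"]:
    queue.press(chord)  # four shortcuts fired off without looking

print(queue.drain())
# → ['alt-space-x', 'ctrl-c', 'alt-tab', 'ctrl-v']
```

All four presses happen before anything is processed, and they still come out in the order they went in.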

Because the keyboard lets you enter discrete commands that are queued in a trusted way, I can now apply whole "sentences" to my work. Just as you can think of typing the word "trusted" into a text editor - a series of characters represented by a series of muscle movements - so you can tie together sequences of shortcuts to perform complex operations.

Keyboard Shortcut Mecca

For example, in Eclipse (a programming editor), I quite often want to format the code, organize my library imports, save, and close the editor. This is ctrl-shift-f, ctrl-shift-o, ctrl-s, ctrl-w. I don't actually think any of those things. I think "end" and the rest all happens with no conscious interaction. The keystrokes take about half a second, and the program updates in between 1 and 5 seconds. My brain has applied a label to a complex pattern. I have shortened the muscle memory location to one place, to one word, to one feeling: "end" in the context of Eclipse.
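A rough way to picture that labelling is a lookup table from one word to an ordered run of shortcuts. The sketch below is only an illustration: `ACTIONS`, `MACROS` and `expand` are names I've invented, and the bindings assume Eclipse's default keymap (where Organize Imports is ctrl-shift-o).

```python
# Hypothetical tables: what each chord does, and which chords a label stands for.
ACTIONS = {
    "ctrl-shift-f": "format code",
    "ctrl-shift-o": "organize imports",  # assumes Eclipse's default binding
    "ctrl-s": "save",
    "ctrl-w": "close editor",
}

MACROS = {
    "end": ["ctrl-shift-f", "ctrl-shift-o", "ctrl-s", "ctrl-w"],
}


def expand(label):
    """Return the ordered actions that a single mental label unfolds into."""
    return [ACTIONS[chord] for chord in MACROS[label]]


print(expand("end"))
# → ['format code', 'organize imports', 'save', 'close editor']
```

One word goes in and four actions come out, always in the same order, which is roughly what my hands do when I think "end".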

You cannot do this with the mouse, and to prove it, here's a challenge. Without looking at the screen, use a mouse and try to save your work. You can think "save" as much as you want, it isn't going to help. In the interest of scientific fairness, I tried to do this 25 times and I managed it twice. The way I did it was to maximize the program, close my eyes and then drag the mouse to the furthest top left position. Then, using the force, I guessed where the file menu was, then guessed where save was. You might get it right. You probably won't. You'll probably lose work.

Even with your eyes open, saving with a mouse takes at least 3 seconds: go to the file menu, find the save option, click. Ctrl-s is virtually instantaneous. How often do you save? How many of those three-second chunks of your life would you have lost if you always used the mouse?
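To put a rough number on it, here is a back-of-the-envelope calculation. The 3 seconds comes from the estimate above; the 100 saves a day and 230 working days a year are purely my assumptions, so plug in your own figures.

```python
def hours_lost_per_year(seconds_per_save=3, saves_per_day=100, working_days=230):
    """Hours spent per year on mouse-driven saves, under the assumed defaults."""
    total_seconds = seconds_per_save * saves_per_day * working_days
    return total_seconds / 3600


# Assumed habits: 100 saves a day, 230 working days a year.
print(round(hours_lost_per_year(), 1))
# → 19.2
```

Under those assumptions that is about 19 hours a year spent doing nothing but reaching for the file menu.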

Could you become as fast with the mouse?

No, for these reasons: Mice are variable. They accelerate, so you can't be sure that your hand gesture will correlate to an exact position on screen. Mouse usage also requires the computer to tell you where the mouse cursor is so you can adjust your hand movements. In fact, as you use the mouse, you and the computer are continually communicating through your hand, the CPU and then the monitor, and then your eyes, your cortex-based CPU and back to your hand again. This is a much slower and more error-prone process than thinking "save".

All input devices, apart from the keyboard, require this kind of human-computer feedback loop to be used. This means that they are missing out on one of our most fundamental skills: muscle memory.

Having worked with a fair number of illustrators and graphic designers, I've found that it's not just programming where keyboard shortcuts are critical, it's in Photoshop and Illustrator too. The best graphic artists hardly seem to use the mouse. They use it for the bezier curve tool, airbrushing, and moving the page around, but nearly everything else seems to be a dazzling array of keyboard shortcuts: copy that layer, make the outer colour transparent, move it up four layers, paste it as an object, move it (using the cursor keys) up 5 pixels, etc.

The typewriter was invented in 1870. Really? 141 years of the same interface and nothing has changed? It would seem that nothing has come along that even remotely matches the keyboard's ability to allow us to interact effectively with our computer friends. Keyboards have gotten way cooler, but quite a lot of these new keyboards have lost their discrete functioning. For example, I don't know if I've pressed a key on this projected keyboard until I see the confirmation on screen.

iFail

Put your hands in the laser beam, it probably doesn't give you cancer.

Show me a new user interface that doesn't require feedback and that can be used blindfolded.

All I can think of are the new neural integration devices. Devices that allow the computer to learn what you're thinking, and then perform associated operations. I think these devices are the only ones that can possibly match and hopefully blow past the speed of the keyboard.

However, the designers need to keep in mind that they'll only beat the keyboard if they allow discrete, trusted queues of commands to be issued. Having to wait for a fancy three-dimensional world to re-render before the next thought can be issued will make sure this interface is never taken seriously.

Have a look at the OCZ version here.